Analysing the Supply and Demand sides of Big Data

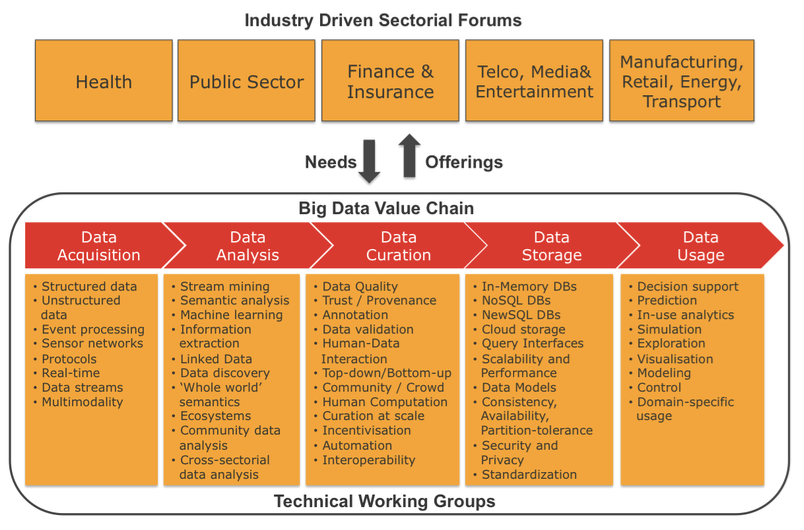

As illustrated above, the sectorial forums and the technical working groups carried out parallel investigations in order to identify:

- Sectorial needs and requirements gathered from different stakeholders and

- The state of the art of big data technologies, together with open research challenges.

As part of the investigation, application sectors expressed their needs with respect to the technology as well as possible limitations and expectations regarding its current and future deployment.

Using the results of the investigation, a gap analysis compared the technological capabilities that were ready with the capabilities the sectors expected, both at the time and for the future. The analysis produced a series of consensus-reflecting sectorial roadmaps that defined priorities and actions to guide further steps in big data research.
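The comparison at the heart of such a gap analysis can be sketched in a few lines of Python. This is only an illustrative sketch, not the procedure used by the project: the `Requirement` record, the `gap_analysis` helper, and all capability and sector values below are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    sector: str
    capability: str
    needed_by: str  # "current" or "future"


def gap_analysis(ready, requirements):
    """Group, by sector, the requirements whose capability is not yet ready."""
    gaps = {}
    for req in requirements:
        if req.capability not in ready:
            gaps.setdefault(req.sector, []).append(req)
    return gaps


# Hypothetical inputs, for illustration only (not findings of the project).
ready_capabilities = {"batch processing", "distributed storage"}
requirements = [
    Requirement("healthcare", "distributed storage", "current"),
    Requirement("healthcare", "real-time analytics", "current"),
    Requirement("media", "semantic enrichment", "future"),
]

gaps = gap_analysis(ready_capabilities, requirements)
for sector, reqs in gaps.items():
    for req in reqs:
        print(f"{sector}: {req.capability} ({req.needed_by})")
```

Each sector's list of unmet requirements would then be the raw material for that sector's roadmap.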

Technical Working Groups

The goal of the technical working groups was to investigate the state of the art in big data technologies to determine their level of maturity, clarity, understandability, and suitability for implementation. To allow for an extensive investigation and detailed mapping of developments, the technical working groups combined top-down and bottom-up approaches, with a focus on the latter. The working groups followed a four-step approach: 1) literature research, 2) subject matter expert interviews, 3) stakeholder workshops, and 4) technical survey.

In the first step each technical working group performed a systematic literature review based on the following activities:

- Identification of relevant types and sources of information

- Analysis of key information in each source

- Identification of key topics for each technical working group

- Identification of the key subject matter experts for each topic as potential interview candidates

- Synthesis of the key messages of each data source into state-of-the-art descriptions for each identified topic

The experts within the consortium outlined the initial starting points for each technical area; the topics were then expanded through the literature search and the subject matter expert interviews.

The following types of data sources were used: scientific papers published in workshops, symposia, conferences, journals and magazines, company white papers, technology vendor websites, open source projects, online magazines, analysts’ data, web blogs, other online sources and interviews conducted by the BIG consortium. The groups focused on sources that mention concrete technologies and analysed them with respect to their values and benefits.

The synthesis step compared the key messages and extracted agreed views that were then summarized in the technical white papers. Topics were prioritized based on the degree to which they are able to address business needs as identified by the sectorial forum working groups.

The literature survey was complemented with a series of interviews with subject matter experts for relevant topic areas. Subject matter expert interviews are a technique well suited to data collection, particularly for exploratory research, because they allow expansive discussions that illuminate factors of importance (Oppenheim, 1992; Yin, 2009). The information gathered is likely to be more accurate than information collected by other methods, since the interviewer can avoid inaccurate or incomplete answers by explaining the questions to the interviewee (Oppenheim, 1992).

The interviews followed a semi-structured protocol. The topics of the interview covered different aspects of big data, with a focus on:

- Goals of big data technology

- Beneficiaries of big data technology

- Drivers and barriers for big data technologies

- Technology and standards for big data technologies

An initial set of interviewees was identified from the literature survey, contacts within the consortium, and a wider search of the big data ecosystem. Interviewees were selected to be representative of the different stakeholders within the big data ecosystem. The selection of interviewees covered 1) established providers of big data technology (typically MNCs), 2) innovative sectorial players who are successful at leveraging big data, 3) new and emerging SMEs in the big data space, and 4) world leading academic authorities in technical areas related to the Big Data Value Chain.

Sectorial Forums

The overall objective of the sectorial forums was to acquire a deep understanding of how big data technology can be used in various industrial sectors, such as healthcare, the public sector, finance and insurance, and media.

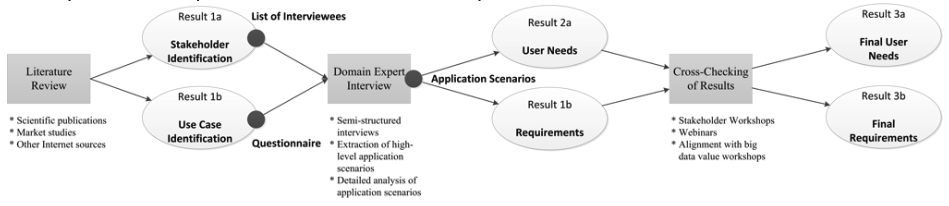

In order to identify the user needs and industrial requisites of each domain, the sectorial forums followed a research methodology encompassing the three steps illustrated above. The steps were carried out separately for each industrial sector. However, where sectors were related (such as Energy and Transport), the results were merged in order to highlight differences and similarities.

The aim of the first step was to identify both stakeholders and use cases for big data applications within the different sectors. To this end, a survey was conducted covering scientific reviews, market studies, and other Internet sources. This knowledge allowed the sectorial forums to identify and select potential interview partners and guided the development of the questionnaire for the domain expert interviews.

The questionnaire consisted of up to 12 questions that were clustered into three parts:

- Direct inquiry of specific user needs

- Indirect evaluation of user needs by discussing the relevance of the use cases identified at Step 1 as well as any other big data applications of which they were aware

- Reviewing constraints that need to be addressed in order to foster the implementation of big data applications in each sector.

In the second step, semi-structured interviews were conducted using the developed questionnaire. At least one representative of each stakeholder group identified in Step 1 was interviewed. To derive the user needs from the collected material, the most relevant and frequently mentioned use cases were aggregated into high-level application scenarios. The data collection and analysis strategy was inspired by the triangulation approach (Flick, 2011). Reviewing and quantitatively assessing the high-level application scenarios yielded a reliable analysis of user needs. Examining the likely constraints on big data applications helped to identify the relevant requirements that needed to be addressed.
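The aggregation of frequently mentioned use cases into application scenarios can be pictured with a small Python sketch. It assumes, purely for illustration, that each interview is reduced to a list of mentioned use cases and that a hand-made lookup assigns each use case to a scenario; the use case names, the scenario names, and the `rank_scenarios` helper are hypothetical and not taken from the project.

```python
from collections import Counter

# Hypothetical assignment of use cases to high-level application scenarios.
SCENARIO_OF = {
    "remote patient monitoring": "patient care analytics",
    "readmission prediction": "patient care analytics",
    "clinical trial matching": "clinical research",
}


def rank_scenarios(interviews, scenario_of):
    """Count in how many interviews each scenario was mentioned."""
    counts = Counter()
    for mentioned_use_cases in interviews:
        # A scenario counts at most once per interview.
        scenarios = {scenario_of[u] for u in mentioned_use_cases if u in scenario_of}
        counts.update(scenarios)
    # Most frequently mentioned first; ties broken alphabetically.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical interview notes, for illustration only.
interviews = [
    ["remote patient monitoring", "readmission prediction"],
    ["clinical trial matching", "readmission prediction"],
    ["remote patient monitoring"],
]

ranked = rank_scenarios(interviews, SCENARIO_OF)
for scenario, mentions in ranked:
    print(f"{scenario}: mentioned in {mentions} interview(s)")
```

Counting per interview rather than per mention keeps one talkative interviewee from dominating the ranking; that is a design choice of this sketch, not something the text prescribes.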

The third step was a cross-check and validation of the initial results of the first two steps, involving stakeholders of the domain. Some sectors conducted dedicated workshops and webinars with industrial stakeholders to discuss and review the outcomes. The results of the workshops were studied and integrated where appropriate.

Cross-Sectorial Roadmapping

Comparison among the different sectors enabled the identification of commonalities and differences at multiple levels, including technical, policy, business, and regulatory. The analysis was used to define an integrated cross-sectorial roadmap that provides a coherent holistic view of the big data domain. The cross-sectorial big data roadmap was defined using the following 3 steps:

- Consolidation to establish a common understanding of requirements as well as technology descriptions and terms used across domains

- Mapping to identify any technologies needed to address the identified cross-sector requirements

- Temporal alignment to highlight which technologies need to be available at what point in time by incorporating the estimated adoption rate by the involved stakeholders.
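The three steps above can be sketched as a small Python pipeline. This is a minimal sketch under stated assumptions, not the project's actual method: the synonym table, the requirement-to-technology mapping, the availability estimates, and the rule that a requirement is cross-sectorial when at least two sectors share it are all hypothetical, and the fixed `EXPECTED_YEAR` lookup merely stands in for the adoption-rate estimates mentioned in the text.

```python
# Hypothetical shared vocabulary used for consolidation.
SYNONYMS = {
    "live analytics": "real-time analytics",
    "stream analytics": "real-time analytics",
}
# Hypothetical mapping from requirements to the technologies addressing them.
TECH_FOR = {
    "real-time analytics": ["stream processing"],
    "data sharing": ["linked data", "anonymisation"],
}
# Hypothetical estimate of when each technology must be available (years ahead).
EXPECTED_YEAR = {"stream processing": 1, "linked data": 2, "anonymisation": 3}


def consolidate(sector_requirements):
    """Step 1: map sector-specific terms onto a common vocabulary."""
    merged = {}
    for sector, terms in sector_requirements.items():
        for term in terms:
            merged.setdefault(SYNONYMS.get(term, term), set()).add(sector)
    return merged


def build_roadmap(sector_requirements, min_sectors=2):
    """Steps 2 and 3: map requirements to technologies and order them in time."""
    roadmap = []
    for requirement, sectors in consolidate(sector_requirements).items():
        if len(sectors) < min_sectors:
            continue  # assumed here: not a cross-sector requirement
        for tech in TECH_FOR.get(requirement, []):
            roadmap.append((EXPECTED_YEAR[tech], tech, requirement, sorted(sectors)))
    return sorted(roadmap)


# Hypothetical sector inputs, for illustration only.
sector_requirements = {
    "energy": ["live analytics", "data sharing"],
    "transport": ["stream analytics"],
    "healthcare": ["data sharing", "image analysis"],
}

roadmap = build_roadmap(sector_requirements)
for year, tech, requirement, sectors in roadmap:
    print(f"+{year}y: {tech} for {requirement} ({', '.join(sectors)})")
```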

Excerpt from: Curry, E. et al. (2016) ‘The BIG Project’, in Cavanillas, J. M., Curry, E., and Wahlster, W. (eds) New Horizons for a Data-Driven Economy: A Roadmap for Usage and Exploitation of Big Data in Europe. Springer International Publishing. doi: 10.1007/978-3-319-21569-3_2.

References

- Flick, U. (2004) ‘Triangulation in Qualitative Research’, in A Companion to Qualitative Research, p. 432.

- Oppenheim, A. N. (1992) Questionnaire Design, Interviewing and Attitude Measurement. Continuum. Available at: http://www.amazon.com/Questionnaire-Design-Interviewing-Attitude-Measurement/dp/1855670445.

- Yin, R. K. (2009) Case Study Research: Design and Methods, Essential guide to qualitative methods in organizational research. Edited by L. Bickman and D. J. Rog. Sage Publications (Applied Social Research Methods Series). doi: 10.1097/FCH.0b013e31822dda9e.